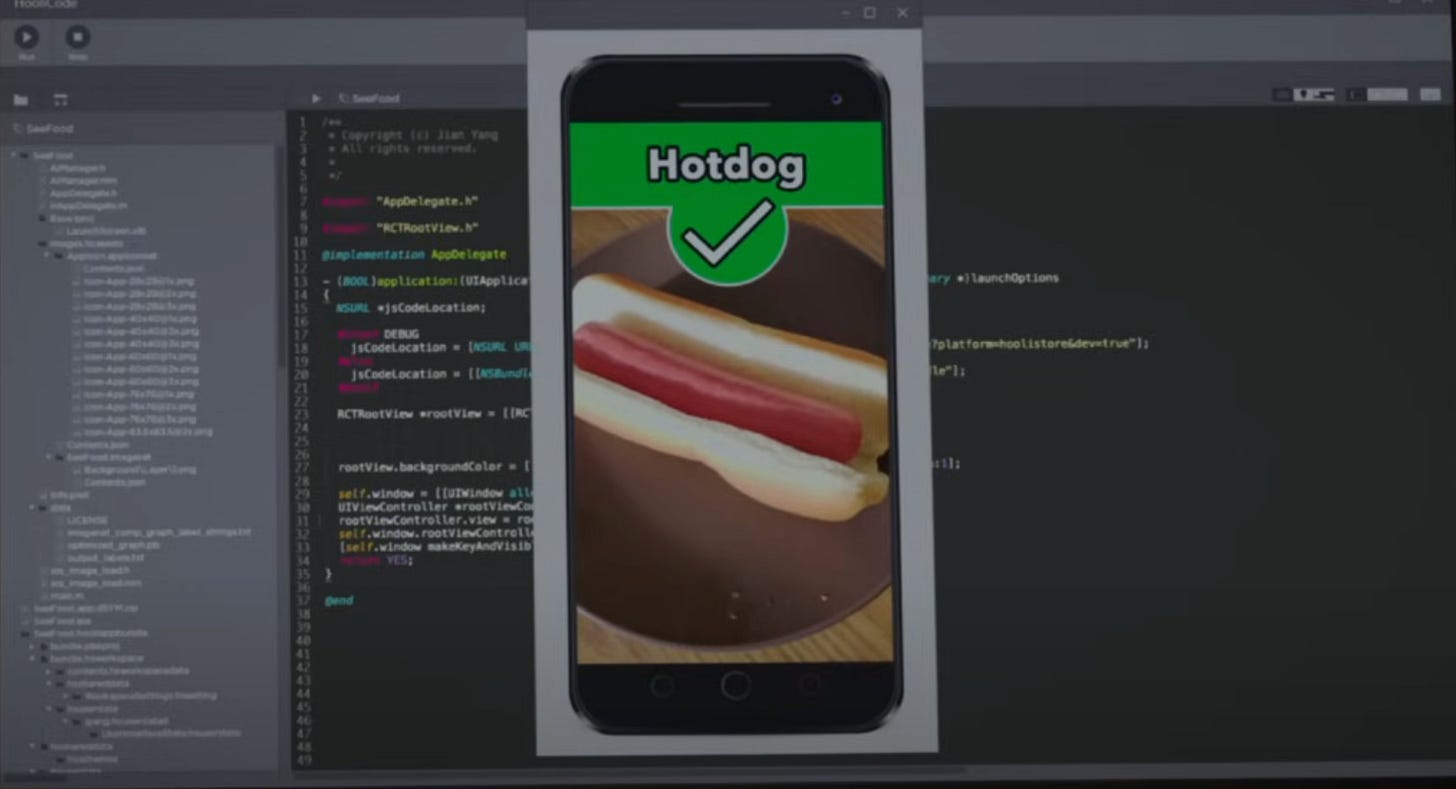

I’ll start off with the thing everyone’s probably busy posting about on LinkedIn: the announcement of GPT-4o (the “o” is short for “oh my god I can’t believe I bought a Humane AI Pin / Rabbit R1”). Honestly, I think the trend toward more and more generally capable models will continue, and other companies like Google and Anthropic will follow suit. With native voice, search, and photo recognition all in one model, I think the smartphone will be the default device, not some weird-looking dongle you pin on your jacket or strap to your forehead.

One thing in legal technology that I don’t think enough people are thinking about is these models’ ability to pull information out of images. I don’t mean the generic “what is this image?” question. Imagine a person taking a photo of their rent demand, eviction complaint, notice of hearing, etc., and having the model explain their situation and recommend next steps.

Anyway, I suspect a lot of people building generative AI solutions for legal aid aren’t thinking this way yet, but maybe they should.

Maybe the unlicensed practice of law was the friends we made along the way

In other news, DocuSign, a legal tech company that does something with contracts, is acquiring Lexion, another legal tech company that does something with contracts. What’s interesting in this article is this bit:

“DocuSign will tap Lexion’s AI models for contract creation and negotiations, while Lexion will build integrations with DocuSign’s products and solutions.”

As Chase Hertel points out, that sure sounds like the unlicensed practice of law. Will regulators care? It always seems to me that the UPL police only really try to enforce against the middle, while the extreme ends (like prison law librarians and multi-million-dollar companies) escape notice.

Other OpenAI news

OpenAI released its Model Spec, which is basically the set of instructions the model is supposed to follow. It’s worth reading the whole thing, but here’s the part that interests me:

For advice on sensitive and/or regulated topics (e.g., legal, medical, and financial), the assistant should equip the user with information without providing regulated advice.

Any disclaimer or disclosure should be concise. It should clearly articulate its limitations, i.e., that it cannot provide the regulated advice being requested, and recommend that the user consult a professional as appropriate.

Is this the end of prompt engineering?

Remember when prompt engineering was going to be the new hotness, with traditional lawyers Susskind’ed into oblivion? Maybe not.

Good riddance. Prompt engineering is so 2023.

Is a special “legal AI product” better than just GPT-4?

This tweet caught my attention because it hits me right in the confirmation bias:

Much to unpack! It’s worth checking out their entire post as well. I’m really tempted to post the picture of James Stewart admiring the painting of himself and his giant rabbit friend, whose name rhymes with “Barvey.” It’s not like any Silicon Valley legal AI company would ever hire me anyway.

I think the biggest question “legal AI” companies have to answer is simple: how is their product better than GPT-4 (now GPT-4o)? Unless it’s a retrieval-augmented generation (RAG) system, it’s probably not, and even RAG systems are built on a model like GPT-4. By the way, I don’t know if you know this, but a rudimentary RAG system is dead simple to build now, with pre-built Python libraries that do the heavy lifting for you. This is not to say that selling a process or a uniquely usable interface isn’t valid; it very much is.
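To give a sense of how little “rudimentary” means, here’s a minimal sketch of the retrieval half of RAG. It deliberately uses only the Python standard library, not any of those pre-built libraries, so it shows none of their actual APIs. Plain word-count cosine similarity stands in for the embedding search real systems usually use, and the documents are made-up placeholders, not legal information. The final step, sending the assembled prompt to a model like GPT-4, is left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents whose word counts look most like the query's."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Paste the retrieved passages into a prompt for whatever model you use."""
    context = "\n\n".join(retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical placeholder "knowledge base", for illustration only.
docs = [
    "Placeholder passage about rent demand notices.",
    "Placeholder passage about an eviction complaint and the hearing date.",
    "Placeholder passage about security deposits.",
]

query = "What should I do about my eviction complaint?"
prompt = build_prompt(query, docs, k=1)
print(prompt)
```

Swapping the word counts for embeddings and the list for a vector store is roughly the heavy lifting those libraries take off your hands.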

The money quote from the post linked above is this (emphasis mine):

The unfortunate fact in all of this is that very few engineers or tech people know how to use lawyers; they exist to point out risk, but it is the domain of the business to choose to accept, mitigate, or avoid it.

That’s all for now. Stay salty.